Bounce Rate in Google Analytics

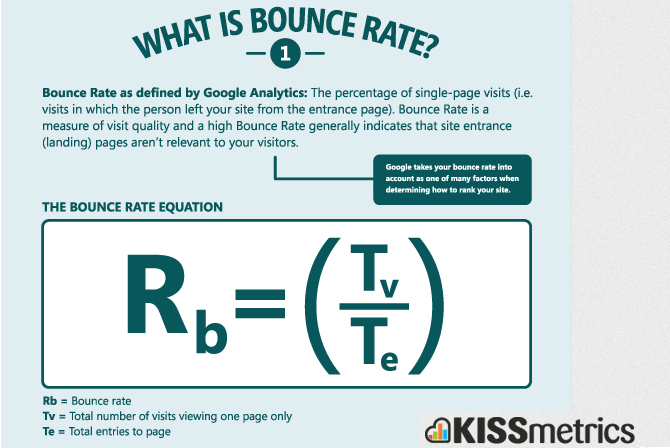

Every few months or so, I see a (re)tweet pointing to this infographic from KissMetrics.

Here’s a snippet:

(Update: to learn about content engagement and calculating time on page in Google Analytics, see this post).

The thing that frustrates me most about this infographic is that its definition of Bounce Rate is wrong (well, at least for GA). Yes, I know that the definition comes directly from the Google Analytics Help Center. But a bounce in Google Analytics is NOT a visit with a single pageview. A bounce is a visit with a single engagement HIT. (Justin Cutroni has a great post explaining these hit types and how Google Analytics time calculations follow from the way the hit types work.) To summarize briefly, there are currently 6 types of hits that can be sent to the Google Analytics server.

- Pageviews (sent via _trackPageview)

- Events (sent via _trackEvent)

- Ecommerce Items (sent via _addItem)

- Ecommerce Transactions (sent via _trackTrans)

- Social (sent via _trackSocial)

- User Defined (deprecated, though still functional; sent via _setVar)
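
To make the "single engagement hit" definition concrete, here is a minimal sketch of how a session could be classified. It is not GA's actual processing code: the `hits` array, its `type` labels, and the `nonInteraction` flag are hypothetical names chosen for illustration, and the sketch assumes that every hit counts as an engagement hit unless it is an event flagged as non-interaction.

```javascript
// Illustrative only: classify a session as a bounce from its list of hits.
// Each hit is a plain object such as { type: 'pageview' } or
// { type: 'event', nonInteraction: true }. These shapes are assumptions,
// not GA's internal data model.
function isBounce(hits) {
  var engagementHits = hits.filter(function (hit) {
    // Events explicitly flagged as non-interaction do not count.
    return !(hit.type === 'event' && hit.nonInteraction === true);
  });
  // A bounce is a session with exactly one engagement hit.
  return engagementHits.length === 1;
}
```

Under these assumptions, a session with one pageview plus one ordinary event is not a bounce, and neither is a session where the same pageview was sent twice.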

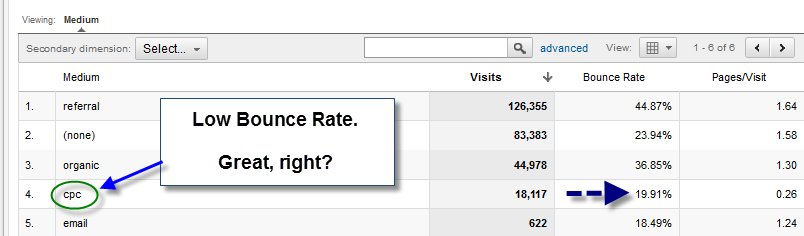

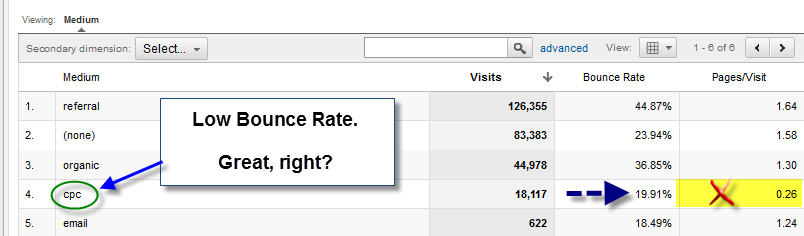

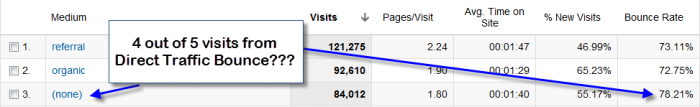

Here is an example of why understanding this technical principle is important when it comes to analysis. In the example below, we see that this client’s Paid Search campaigns have a particularly low bounce rate.

However, you might have noticed that something is a little bit fishy here.

Hint: Pages/Visit

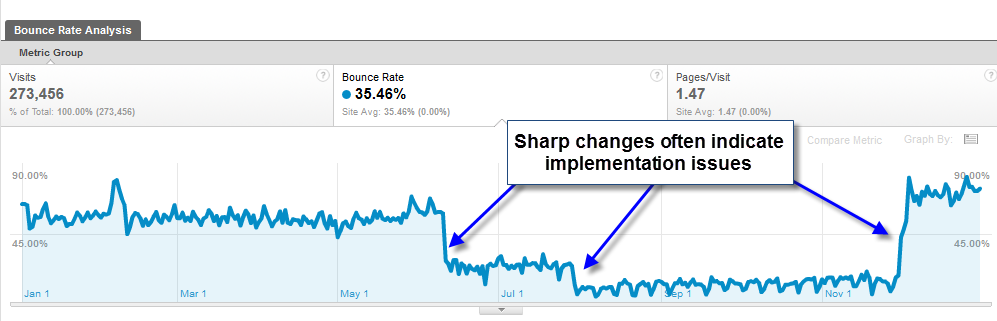

The fact that GA was reporting fewer than one page per visit is a clear indication that this site has problems with its implementation. Indeed, when looking at the site’s bounce rate over time, we see sharp changes. Big Problem City.

Knowing that bounce rate is affected whenever more than one engagement hit gets sent to GA is critical to getting your analysis right. It is quite common for software developers to come up with a GA integration that doesn’t take bounce rate into account. The most common culprits I’ve seen are live chat (where the auto-invite sends a Pageview or Event) and auto-playing videos that are tracked by virtual pageviews or events (where non-interaction was not invoked). The use of a particular live chat application is what caused bounce rate to plummet in the above example. Bounce rate is also impacted by cookie integrity issues, which cause sessions to get reset. Additionally, and I’m still surprised by how many times I see this, having the GA tracking code more than once on a page is a surefire way to bring your bounce rate down to zero. As my friend Caleb Whitmore puts it, “A 3.8% bounce rate isn’t really good, it’s broken.”
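
For automatic hits like a chat auto-invite, the classic `_trackEvent` call takes an optional fifth argument, `opt_noninteraction`, that keeps the event out of the bounce calculation. The sketch below assumes the standard asynchronous `_gaq` queue; the category, action, and label strings and the `trackChatAutoInvite` helper are made up for illustration and do not come from any particular chat vendor.

```javascript
// Standard async queue; ga.js processes these commands once it loads.
var _gaq = _gaq || [];

// Hypothetical helper a chat widget could call when it shows an
// automatic invitation (no user action involved).
function trackChatAutoInvite() {
  _gaq.push([
    '_trackEvent',
    'Live Chat',    // category (illustrative)
    'Auto Invite',  // action (illustrative)
    'shown',        // opt_label
    0,              // opt_value
    true            // opt_noninteraction: do not affect bounce rate
  ]);
}
```

If the last argument is omitted or `false`, a visit with one pageview and one auto-invite would no longer count as a bounce.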

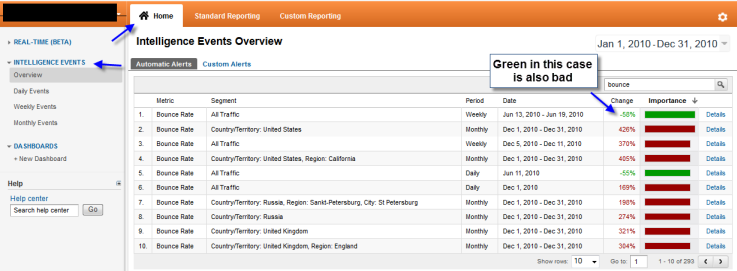

An important side note: As web developers have an uncanny tendency to break GA implementations, make sure you use GA Intelligence Alerts. (After this post, read yet another great post by Justin about data alerts).

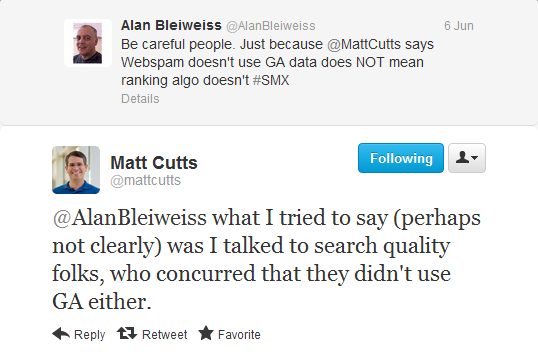

Bounce Rate and SEO

Based upon what we’ve seen above about how bounce rate can be (a) broken and (b) impacted by code, I just want to say that I totally take Matt Cutts at his word when he says that search rankings do not take Google Analytics into account. Google Analytics’ metrics are far too easy to manipulate for the Search Quality Team to use them in rankings, IMHO. Furthermore, there are so many broken implementations that it would be foolhardy to consider pages/visit or bounce rate metrics on a global scale to be reliable as a search quality signal. </my two cents>.

Bounce Rate in Context

One of the most often quoted lines about bounce rate is Avinash Kaushik’s famous definition, “I came, I puked, I left.” While this definition does hold water a lot of the time, I believe that ultimately it is too simplistic. Avinash certainly makes great points in the video above. If you haven’t seen it before, it is 4 minutes and 45 seconds of classic Avinash awesomeness. I appreciate that with that quote Avinash is trying to express something deeply powerful by crystallizing a concept. Nevertheless, when bounce rate is looked at in a monolithic fashion, without exploring the nature of the site or its page types, it is quite possible to draw incorrect conclusions about user behavior on one’s site.

For example, the following site publishes a lot of content multiple times per day. They get a lot of traffic, and have a “high” bounce rate. Even the Direct Traffic to the site bounces at a high rate.

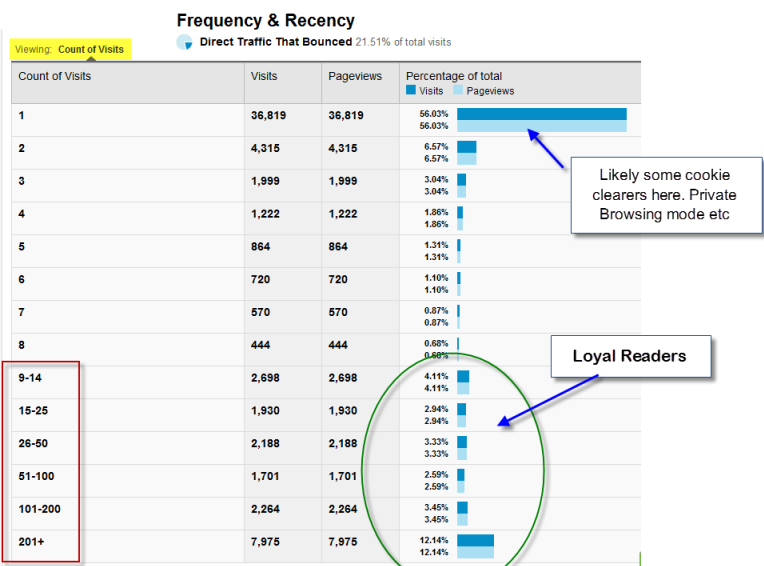

Creating an advanced segment for Direct Traffic that Bounced and applying it to the Frequency & Recency reports reveals a completely new perspective on the nature of this traffic.

Almost 30% of the Direct Traffic that bounced was from visitors who had been to the site 9 or more times. It certainly does not seem like these people were puking and leaving. Indeed, most of the time that I find myself at Avinash’s blog, I spend a significant amount of time reading an article and then “bounce.” A measure of success for content sites is not necessarily if the reader bounces or not, but whether or not they read the article and (more importantly) come back.

Eivind Savio has a great post where he shares a script (originally from Thomas Baekdal) that helps add a tremendous amount of context to traditional bounce rate metrics; namely, it is a relatively complex (and extremely elegant) script that tracks user scrolling behavior. And yes, another shout-out to Justin Cutroni, who wrote extensively about this in a two-part post. Personally, I like Eivind’s approach of using non-interaction events instead of messing with bounce rate. I’m curious what any of you readers think. Perhaps it really is appropriate to change the way that GA “normally” treats bounce rate based upon indications that a person is indeed reading an article. Not sure…
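
To give a feel for the non-interaction approach, here is a much-simplified sketch of scroll-depth tracking. It is not Eivind’s or Thomas Baekdal’s script: the function names, the thresholds, and the event category are assumptions for illustration, and it leaves out the timing logic and throttling that a production script would want.

```javascript
var _gaq = _gaq || [];

// Percentage of the scrollable distance covered, from 0 to 100.
function scrollPercent(scrollTop, viewportHeight, documentHeight) {
  var scrollable = documentHeight - viewportHeight;
  if (scrollable <= 0) return 100; // page fits in the viewport
  return Math.min(100, Math.round((scrollTop / scrollable) * 100));
}

// Returns a function that queues one non-interaction event per threshold,
// the first time that threshold is reached.
function createScrollTracker(queue, thresholds) {
  var fired = {};
  return function (pct) {
    thresholds.forEach(function (t) {
      if (pct >= t && !fired[t]) {
        fired[t] = true;
        // Final `true` = opt_noninteraction, so bounce rate is untouched.
        queue.push(['_trackEvent', 'Scroll Depth', t + '%', 'scroll', 0, true]);
      }
    });
  };
}

// Browser wiring; skipped when no window object exists.
if (typeof window !== 'undefined') {
  var onScroll = createScrollTracker(_gaq, [25, 50, 75, 100]);
  window.addEventListener('scroll', function () {
    var doc = document.documentElement;
    onScroll(scrollPercent(window.pageYOffset, window.innerHeight, doc.scrollHeight));
  });
}
```

Dropping the final `true` from the event would make scrolling count as an interaction, which is exactly the bounce-rate-changing alternative discussed above.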

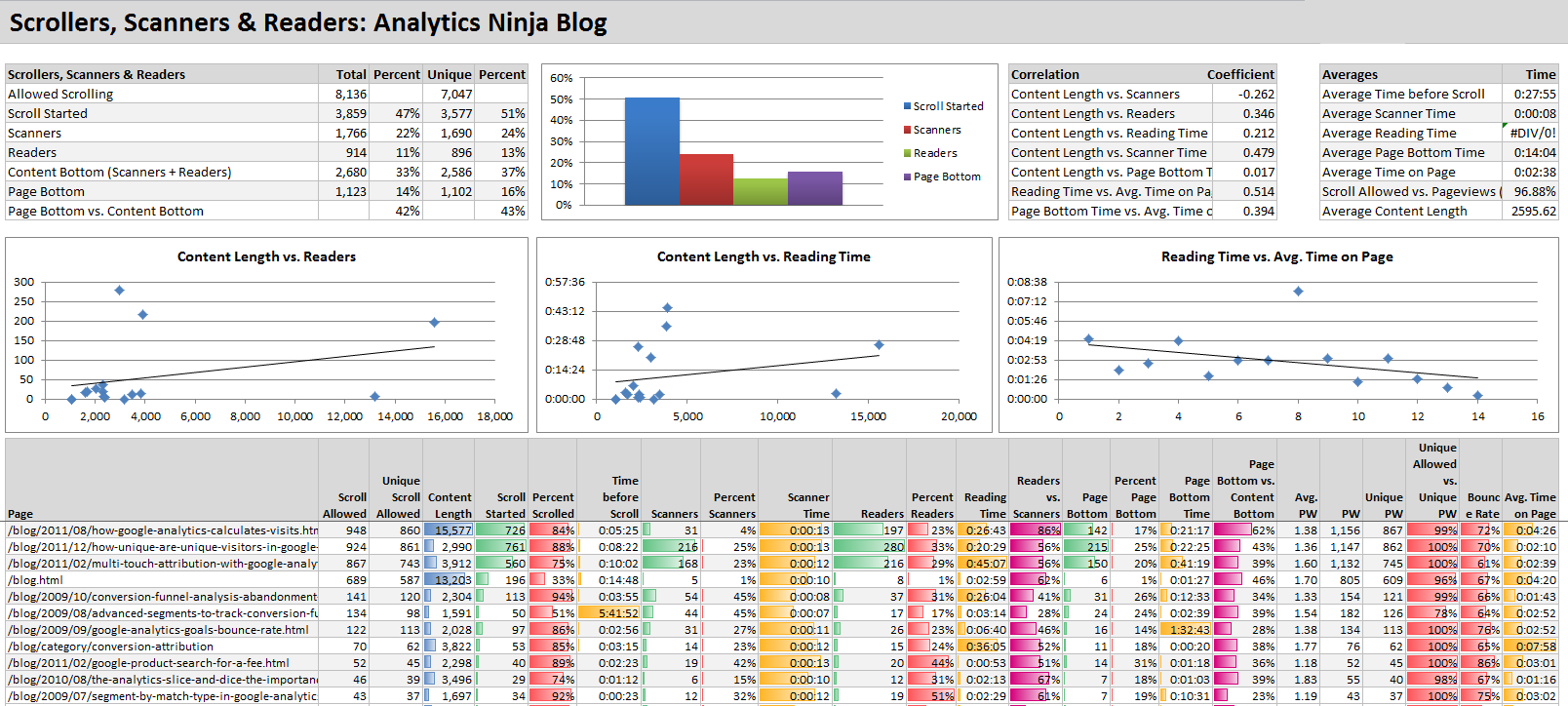

Eivind has put together a wonderful Excel worksheet that allows one to pull all of the scroll data into a dashboard using Next Analytics. Here is some data from this blog.

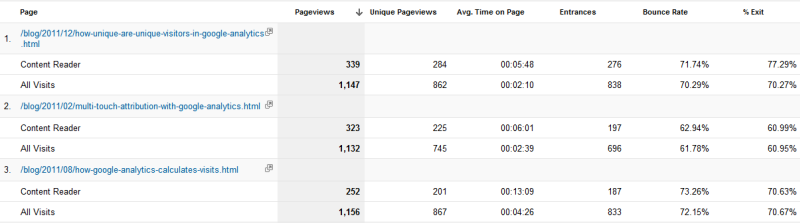

My most popular posts (about the change in how Google Analytics defines a session, about unique visitors, and about multi-touch attribution) all have bounce rates above 60%. However, between 23% and 33% of the pageviews had users who scrolled to the bottom of the content section of the post in more than 30 seconds. Indeed, for those posts, more than 75% of the pageviews had some sort of scrolling behavior. If I didn’t have scroll tracking on these pages, I would be stuck with a low time on page metric and a high bounce rate. At least now I know that at least a few people are reading my blog posts. 🙂

Interestingly, the bounce and exit rates for “Content Readers” are practically the same as the overall average for those posts. This is just another indication that Bounce Rate is not the “be-all and end-all” metric when it comes to understanding user behavior. Always keep things in context.

I want to end this post by pointing you to an excellent piece by Kayden Kelly from Blast Advanced Media about Bounce Rate. He touches on a number of points that were brought up here, and a lot of other issues (like the difference between bounce rate and exit rate), which I specifically didn’t address because he did such a good job. It is really a worthwhile read.

Great stuff. Thanks for that. I always point out to clients the context of the webpage. If your conversion pages have bad bounce rates or exit rates – time to improve!

Absolutely. In many cases, bounce rate quickly identifies major problem areas. Especially for ecommerce sites, it is one of the first places I look when measuring the performance of inbound marketing efforts. There are other “moving parts,” however, which is why I wanted to write this post.

BTW, did you bounce after reading this? 🙂 (The disqus comment engine isn’t integrated into my own GA).

Agreed completely. Bounce rate is an indicator, but, especially for blog posts, it is a different segment of potential buyers, different segment of consumers. I’ve written a blog post today about it:

http://www.webiny.com/blog/2012/06/26/how-to-reduce-bounce-rate-engage-your-audience-and-drive-conversions-with-your-blog/

Great!

I must have been sleeping lately, don’t know how I missed this blog post.

Great write up (and thx for the mentioning BTW).

The “Bounce Rate problem” is something I meet every week. The answer is always “context” or “missing or wrong implementation”.

But as you mention Yehoshua, it’s a great place to start to look when it comes to inbound marketing.

this is just amazing analysis. cheers for all the talented analytic people and programers working around the world sharing knowledge with each other.

Awesome post, as usual, Yehoshua.

I love exploring the tracking script tweaks on sites like your own, too. On a side note, going to give gaCookies() a look into.

Crap. even though i knew our live chat had to be the reason our bounce rate dropped, i was in denial until i read this post. we got bamboozled into thinking our bounce rate was great for over 3 months! thanks so much for the article.

Hi Kate,

Thanks for the comment. Yeah, it can be really frustrating to find out that a technical issue is impacting your data. I see it really often across websites large and small. However, it’s really a great thing to identify and fix problems (as it sounds like you did). I’m glad you appreciated my article. Thank you.

FYI, that link to blastam.com’s blog is broken. It looks like the URL is no longer valid?

Hi There, Nice explanation on bounce rate. I wrote a post on Bounce Rate and CTR, here is the link: http://baawraman.wordpress.com/2012/12/09/bounce-rate-and-seo/ , It talks about CTR and Bounce rate. Hope it will help readers to undersand Bounce rate further.

Thanks

Salik

THANK you for this. I’ve been trying to figure out why my bounce rate was literally sitting at 0 for two weeks. Although that would be really cool, I know how improbable it is, especially on a site like mine, haha. I found the second code in the header. I have no idea how it got there because I definitely didn’t add it again (in my conscious mind anyway). That should fix it.

I’m still trying to figure out what would be the best heuristic fix for my site. My articles are usually between 2,000 and 7,000 words so I would expect that a lot of people would click out at that point and come back at another time. I need to find a solution that includes time on site (real time on site), which events were beneficial (ads or digging deeper in the site), and if they just opened me up into another tab and did something else for a while like I do to 99% of websites. You’ve now given me more to consider unfortunately. Great article!

Thanks for the nice comment. Adding scroll tracking to your site is a definite first step for answering some of the questions you raised. I would consider having some of the events be interactive, such as reached bottom of page or Content Reader, and not just use non-interactive events across the board.

If you are interested in getting time on page / site metrics that do not include time that the user spends in another tab, you should consider Personyze, which stops the clock when you go into another tab.

If you think that people are coming back to your site at a later time to continue reading a long article, consider setting a custom variable with a time stamp of their first visit so you can see whether future visits to the same page are just the result of a timed-out session. Indeed, with analytics implementations there is always a lot to consider. 🙂
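
As a rough sketch of the custom variable idea, the classic `_setCustomVar` call can store a first-visit date at visitor scope. The slot number, the variable name “First Visit”, and both helper functions are illustrative assumptions. The cookie check also assumes that a missing `__utma` cookie means ga.js has not seen this browser before, which should be verified against your own setup.

```javascript
var _gaq = _gaq || [];

// Build a visitor-level custom variable holding the first-visit date.
function firstVisitCommand(slot, date) {
  var stamp = date.toISOString().slice(0, 10); // UTC date as YYYY-MM-DD
  return ['_setCustomVar', slot, 'First Visit', stamp, 1]; // 1 = visitor scope
}

// Assumption: no __utma cookie yet means this is a first visit.
function looksLikeFirstVisit(cookieString) {
  return cookieString.indexOf('__utma=') === -1;
}

// Browser wiring; skipped when no document object exists.
if (typeof document !== 'undefined' && looksLikeFirstVisit(document.cookie)) {
  // Queue this before _trackPageview so the value is sent with that hit.
  _gaq.push(firstVisitCommand(1, new Date()));
}
```

Segmenting return visits by this value would then show how many repeat readers of a long article first arrived on an earlier date.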

Thanks for the sharing knowledge.

Vijay

http://rightern.com

Thanks for the sharing! Am curious how did you configure the ‘Percentage of total’ column in your Frequency & Recency table to appear in google analytics?

Wow! great info. thanks for clearing that up.

I have a question though. I switched domains, and didn’t update the tracking code until a few days after. Now I’ve got ~70% bounce rates (previously ~3%).

Is my implementation broken? Do you have an idea how I can check? or what a possible solution might be?

You definitely have a tracking code issue –> on the OLD site. You most likely had 2 snippets of the code (though there can be many different issues). A ~3% bounce rate is suspect.

Ah! the new code was much cleaner. Thanks Yehoshua! 😀

great and informative post

Great article, stumbled across your blog by accident, but I’m having fun reading your stuff thus far. I think the thing that infuriates me about bounce rate is that it’s so subjective. Google defines it one way, many users define it as something different. For instance, many people come to my site to solve problems that they are having. Once they read up on how to solve the problem, they leave. While google considers that a bounce, the user stays on my pages for an average of 27 minutes, and they often come back to reference the same page in the future. I had to add in some JS code to tell analytics that the user is reading the page just to get it to record bounce rate the right way for me. Taking my previous example, many of my users come, find my articles, read them, and leave. Later on they come back and read again…then, many of them (and i mean this) decide to explore the rest of my site. Even though they were a bounce the first time and even the second time according to Google, they actually become loyal readers and visit my site looking for new articles every day. Just my 2 cents.

Rich –

Don’t get so infuriated! 🙂

Something that your comment illustrates is the need for digital analytics implementations to match each site / business uniquely. If you’re able to determine the ways that your site gains readership and that includes custom javascript to understand their reading behavior, that is not a short-coming of GA. That is simply the way the product works. In other words, the information one sends to GA is *supposed to be* configured / aligned with the use cases of particular sites. Advanced scroll tracking for blogs or content oriented sites is quite important for publishers, but doesn’t do much for ecommerce.

Yes, Google Analytics does have default settings. But anyone who simply copies and pastes the basic snippet onto their site is sorely missing out on what the product is capable of.

In any case, it is nice to hear that you’re looking at your bounce rate in context, and learning about your user behavior in a deeper way.

Yehoshua

Nice write up. Lots of great info. Our site was redesigned to have fewer pages and be nearly single page, to improve the user experience on mobile and tablet. This has caused a big issue with bounce rate and rank in my opinion. Trying to fix it.

@CampusMedia:disqus

Rank is one thing, bounce rate is another. You can change your analytics implementation to more closely measure your users’ interaction with the page, and thereby make bounce rate more in line with user behavior (for example, adding event tracking to internal clicks on anchors, etc).

Regarding SEO, that’s something that you’ll need to explore separately.
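
For a near single-page site, event tracking on internal anchor clicks might look something like the sketch below. The helper names and the event category are made up for illustration. Because these events are sent without the non-interaction flag, a visit that includes such a click would no longer count as a bounce.

```javascript
var _gaq = _gaq || [];

// True for same-page links such as "#pricing" (but not a bare "#").
function isInternalAnchor(href) {
  return typeof href === 'string' && href.charAt(0) === '#' && href.length > 1;
}

// Interaction event: no non-interaction flag, so it affects bounce rate.
function anchorClickCommand(href) {
  return ['_trackEvent', 'Internal Anchor', 'click', href];
}

// Browser wiring; skipped when no document object exists.
if (typeof document !== 'undefined') {
  document.addEventListener('click', function (e) {
    var link = e.target.closest ? e.target.closest('a') : null;
    if (!link) return;
    var href = link.getAttribute('href');
    if (isInternalAnchor(href)) _gaq.push(anchorClickCommand(href));
  });
}
```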

Great post and information. Any idea on how bounce rate is defined in GA on mobile and tablet?

A great article and explanation of bounce rate, a common and very important issue which most of us face.

http://gadgetworld-mania.blogspot.in/

Brilliant stuff. I’m only starting to mess around with G.A. but I’ve already read other “courses” online. They pander to the widely-accepted idea of bounce rate – thank god I chanced upon this.

Btw, do you have a tutorial how I can set this part up:

“Creating an advanced segment for Direct Traffic that Bounced and applying it to the Frequency & Recency reports, reveals a completely new perspective on the nature of this traffic.”

Hello Yehoshua,

I have just added a second UA in my page and got a 99% bounce rate in the second account (the new one).

Do you have a clue about the reason and a possible solution?

When saying ‘t2._setDomainName’ do must I say none or .mydomain.com?

Thanks a lot

Javi

Why is my bounce rate under the “Audience” section in Google Analytics different than my bounce rate under the “Behavior” section?

Really i love this blog because it displays image and by that image i will better understand about this topic and try to compare my actual site data with this data and find difference.

I am working with a website having bounce rate 0%. We don’t use cookie in the website and there is no issue of duplicate analytics code in index file. What could be the reason for showing 0% bounce rate?

Hi @Yehoshua Coren I’m in serious problem. I’ve a blog related to how-to and best gadgets the most of the traffic I was getting 2 days back was on guides’ pages and my sites’ bounce rate is 84% on average. My user just open up 1 page and follows the guide and leave it but my avg time on articles is 2 to 5 minutes. But google has penalized my blog and I’m sure it’s panda because my friend with 80% of bounce rate also got penalize by panda on the same same. What should I do now? I’m seriously confused. 🙁 Please help.

Bounce rate doesn’t impact SEO and it is not part of the Panda algorithm.

Bounce rate does have an impact as an SE ranking factor and it’s a part of the Penguin algorithm. (This is to avoid unnatural backlinks and irrelevant visits.)

Try Fruition software to check, By which algorithm your blog has been penalized.

Link plz?

thank you

Great article, I visited another page so I didn’t count as a bounce for you 😉

Great article. When I first read about bounce rate I didn’t agree it should be low and my website topic is an exception. My one site http://www.brightverge.com had 32% bounce rate and I decided to improve the quality of the page and bounce rate was up to 40% so you would think that is worse result but actually average time increased from 5 min 49s to 7 min 27s so in my case I believe this was a good result and I just need to work on the bottom part of my article so users can navigate to related article. I also have a site http://www.gocime.com with bounce rate 83% and avg time 5 min which is a solves a very technical issue and to be honest I don’t think any visitor would like to go to another page as they have an urgent issue they must resolve quickly so I don’t think I will be improving bounce rate in this case….

But overall I do want to reduce bounce rate but only by improving quality of my pages to my users.

Wonderful blog & good post. It is really helpful for me, awaiting for more new post. Keep Blogging!

Great post! We have a custom browser on which we load a start page from our domain. So every time someone uses our product, we get a bounce because they don’t always actually do anything on the start page. Is there a way to tell Google to ignore these views, but still get analytics on when someone enters a purchase flow from that start page?

Thanks for sharing! I didn’t know that the data could be biased because of the Live Chat. I use both Google Analytics and Live chat and the data from the last one gets forwarded automatically to the first. Never noticed anything strange but I will pay more attention from now on.

Nice minimalist way to track activity on page (with or without applying interaction to kill bounce rate): http://riveted.parsnip.io/

I see a recent Analytics Ninja post addresses active time on page too: https://www.analytics-ninja.com/blog/2015/02/real-time-page-google-analytics.html

PS

“why understanding this technical principal is important” –> principle

How to reduce bounce rate by one simple trick? Any trick to engage users???

I genuinely appreciate your analysis. It explains something I’ve notice with my blog (MediaVidi.com).

As my blog traffic has gone up, so has my bounce rate!

After reading your analysis, I realize much of my traffic comes from “frequent fliers” who return to my blog when I Tweet about new content it or I email my subscribers.

Once someone has looked around my blog once, there’s no reason to stick around after they read a new post. So a high bounce rate can simply be a symptom of a loyal following!

Also, I use my blog to generate traffic for the website for my startup (MondoPlayer.com). When people come to my blog, my primary goal is to send them to my website. Anyone who clicks to my website after just reading 1 page of my blog counts as a “bounce”, but they certainly don’t count as a “puker”. 🙂

After reading your analysis, I’m going to look at all my metrics differently. Good job!

That’s a great post! Informative, interesting and well-written. Here’s something that you might be interested to take a look at and get some more insight into bounce rate:

http://www.mavenec.com/blog/bounce-rate-google-analytics/

My site is very low bounce rate

please help me 🙁

http://fsp-co.com

You don’t even have GA on your site.

Yehoshua – what about my site with 5% bounce and avg pages/session: 8 ?

http://www.agtrader.com.au

There’s a special header added to requests by ajaxsnapshots when it loads a snapshot

We filter out those requests

I also have a website but I don’t know about bounce rate and neither I checked it. After reading your post I checked the Bounce Rate of my website and it is too high. Now i am working on it to control the bounce rate. Thanks for sharing such informative post.

The Ninja in your icon appears to have a fencing foil with a blade guard instead of a katana.

Nice article, though.

I typically find the best bounce rates are between 50 and 65%. Does that sound right?

This is very informative. Definitely going to use this on my website http://www.smartappreview.com/

This is amazing post I have been seeing my bounce rate of 84% in google analytic for my blog http://habeshaentertainment.blogspot.com/

Thanks to you I’m working on it!!!

Awesome write up – definitely learned a lot. And to think this article is YEARS old! Where have I been?

As important and accurate now as it was 4 years ago, thanks

Just figured out why my bounce rate is zero because of this, I had double implementation from google tag manager and an internal SEO plugin Thanks for the article.

Perfect article!!! it has all the information for some one to start searching how to understand who the visitors behavior in the website.

My website Bounce rate 48%, it’s good or bad for my site ? http://www.editworks.co.in/