Anyone who works with Google Analytics should be using BigQuery for their analysis and reporting. There, I said it. Hit level, raw event data is your best source for whatever you want to report, if you want any accuracy at all. Want to exclude employee traffic? Easy. Found some bots? They’re gone. But this isn’t an article about why you should use BigQuery. It’s an article about cost.

The GA4 export is less than ideal. It’s date sharded instead of partitioned and it’s not clustered. This means that when we create our intermediate, incremental analytics tables that we’re scanning large amounts of unnecessary data, as we exclude different events from different intermediate tables to save on costs further down the line.

To reduce the cost in doing this, we now cluster our GA4 tables as soon as they arrive in BQ. We’re using Google Workflows as our orchestration platform to make the necessary API calls to automate the clustering for GA4 exports for 15 properties that are exporting to the same project.. We could use Cloud Functions, but we only need to make a couple of API calls, so it would be a bit overkill, the execution by Workflows is significantly faster than Cloud Functions and as we’re not doing any data handling we don’t need the full runtime.

Workflows is a great platform for orchestration, as the complex parts of the execution, such as authorisation, error handling and retry are moved into simple YAML. It’s also basically free, you get 5,000 free internal steps per month, and every 1000 steps after costs $0.01. We’re only using internal steps to call Google APIs, making external HTTP requests costs more, but still only pennies. This means you can change 50 tables per day at zero cost!

For those of you who, like me, don’t care about the blog post and just want the code, here it is. If you know what you’re doing then feel free to take it and run! Looks complicated? Don’t worry, keep reading below and we’ll go through it and break it down.

main:

params: [event]

steps:

- extract_data:

assign:

- base64: ${base64.decode(event.data.message.data)}

- message: ${json.decode(text.decode(base64))}

- project: ${message.protoPayload.serviceData.jobCompletedEvent.job.jobConfiguration.load.destinationTable.projectId}

- dataset: ${message.protoPayload.serviceData.jobCompletedEvent.job.jobConfiguration.load.destinationTable.datasetId}

- table: ${message.protoPayload.serviceData.jobCompletedEvent.job.jobConfiguration.load.destinationTable.tableId}

- cluster:

call: googleapis.bigquery.v2.tables.update

args:

projectId: ${project}

datasetId: ${dataset}

tableId: ${table}

body:

clustering:

fields:

- "event_name"

- query:

call: googleapis.bigquery.v2.jobs.insert

args:

projectId: ${project}

body:

configuration:

query:

query: ${"UPDATE `"+dataset+"."+table+"` SET event_name=event_name WHERE true"}

useLegacySql: false

What does it do?

The workflow above is pretty straight forward, it doesn’t loop or have any conditions, it just runs some steps in sequence. For this workflow there are three steps.

- Extract the table data from the message.

This is an event driven workflow, that runs when the table is loaded. This means that we can use a single workflow for multiple GA4 properties in the same GCP Project, and even redeploy the same workflow in multiple projects with no code changes.

The data comes in in a base64 encoded format, so the first step is to decode that. Once we have the text we can decode it into JSON so we can access the attributes by key.

In this workload, we’re extracting the Project ID, Dataset and Table ID from the message. You could hardcode these values in the workflow, but by fetching them from the message we can use the same code for multiple tables. - Tell BigQuery to cluster the table by event (and any other columns you want)

The GA4 Export is date sharded, not partitioned. This means that every day has its own table and its own metadata. To cluster the table, we need to update the clustering specification separately for each day. (If the tables were partitioned then the clustering specification would persist and new data would automatically be clustered… just a thought @googleanalytics!)

If you want to add additional columns to the clustering specification, you’ll need to add them to the fields array in the order you want to cluster in. - Cluster the data

Even when we’ve updated the specification, the data aren’t clustered. We need to update the table to cluster the existing data. This is done by running a simple UPDATE query. Again, if you want to add additional columns then add column_name=column_name to the query.

NOTE: IF you’re running this on existing data, it will revert any tables in Long Term storage to Active Storage and reset the 90 day time period, so you might want to consider your cost savings on your queries vs the storage costs.

Getting it running

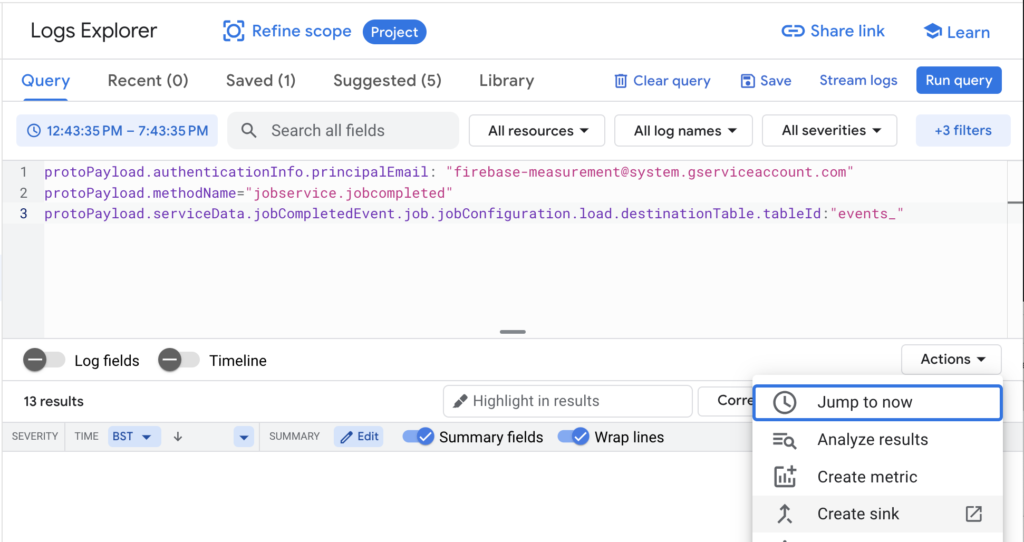

This workflow is designed to be triggered by a PubSub Event. As our GCP project is the home of multiple GA4 properties, we’ve created a generic Log Router and Sink from our GCP Logs to trigger the pipeline whenever the table loads. You can create a Sink directly from the Logs Explorer using the query below.

Set the Sink destination to a Cloud PubSub Topic, make sure you create a new topic, so you don’t trigger the workflow unnecessarily. This also ensures that we are sending the correct logs to the workflow so we can pull the variables out of the message body.

protoPayload.authenticationInfo.principalEmail: "[email protected]"

protoPayload.methodName: "jobservice.jobcompleted"

protoPayload.serviceData.jobCompletedEvent.job.jobConfiguration.load.destinationTable.tableId: "events_"

Deploy the workflow in Workflows, using the PubSub Topic you created above as the trigger, and ensure that the Service Account you’re using to run the Workflow has at least roles/bigquery.dataEditor on the GA Dataset and roles/bigquery.user or roles/bigquery.jobUser to run jobs.

As with everything you do on GCP, ensure that you have monitoring and alerting set up so you can be sure that your jobs are running correctly.

If you’ve got any questions about setting up your workloads in GCP, automating your reporting or managing your cloud infrastructure then get in touch and we’ll be happy to walk you through it.

This is awesome!!!